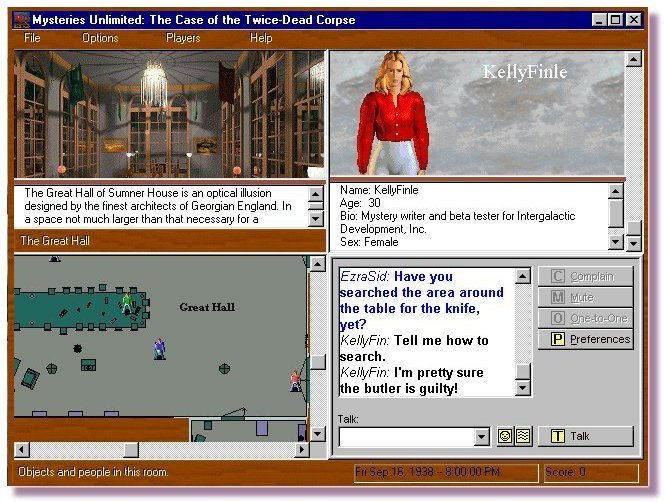

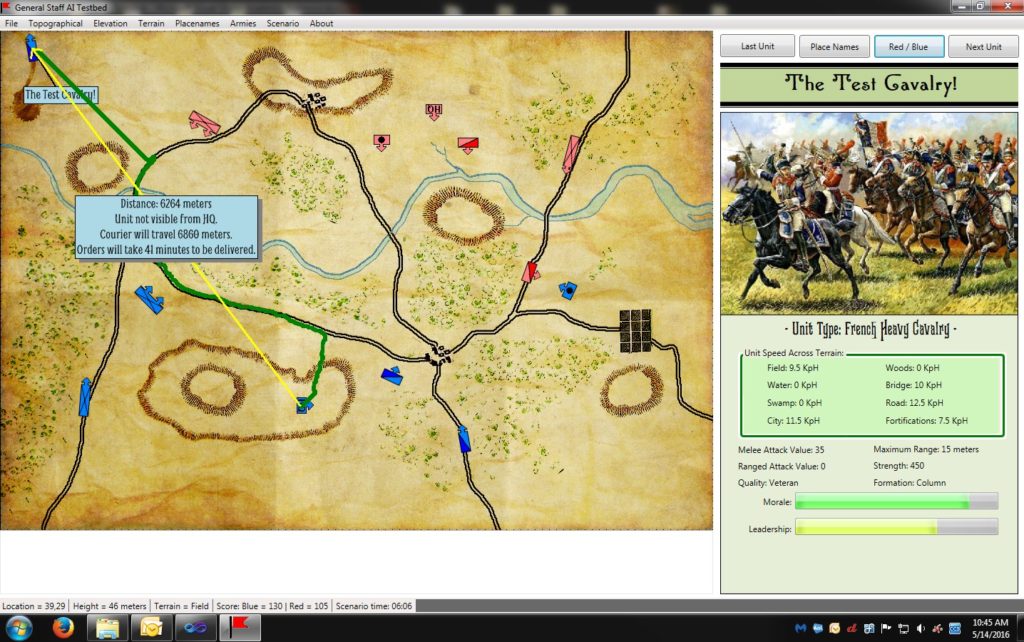

An amazing screen capture of the AI’s solution to a problem. It has found a 1 pixel gap between the data and the edge of the screen and is exploiting it to successfully find an ‘open flank’ of Red. Click to enlarge.

Professor Alberto M. Segre was my thesis advisor and one day he said to me, “You know when your AI is really working because it will surprise you.” Today I got to have one of those weird surprises.

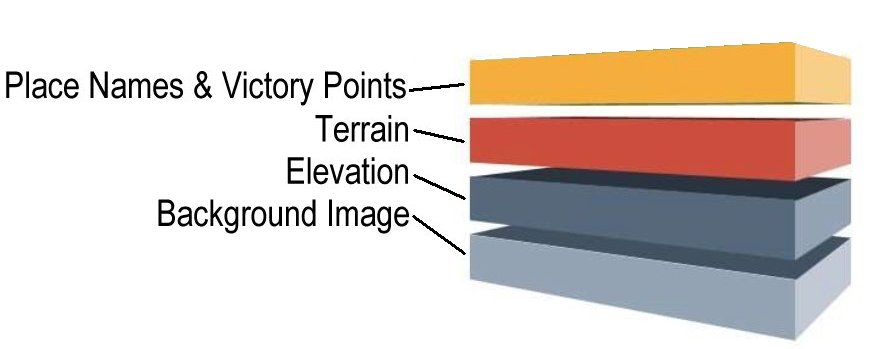

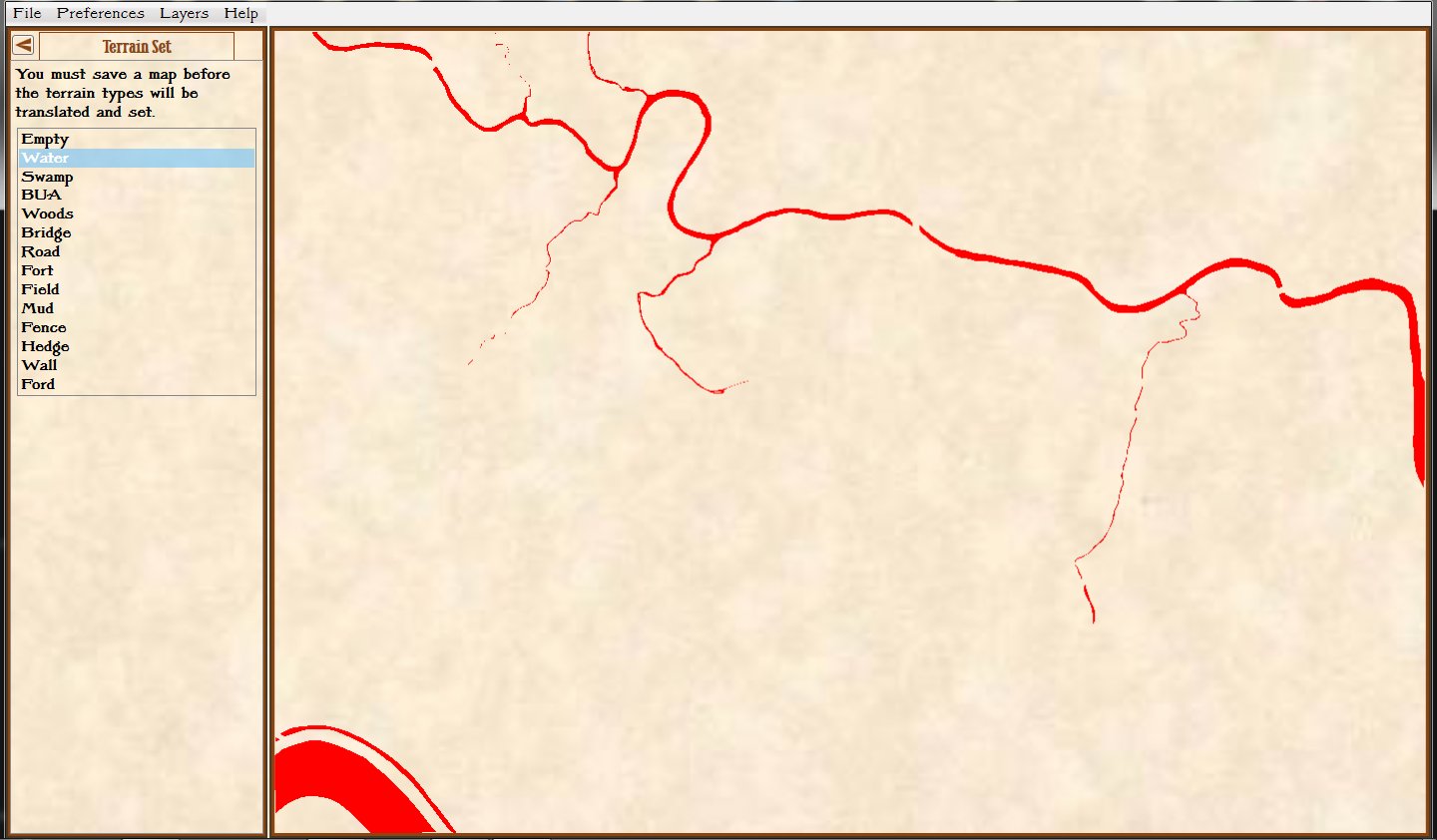

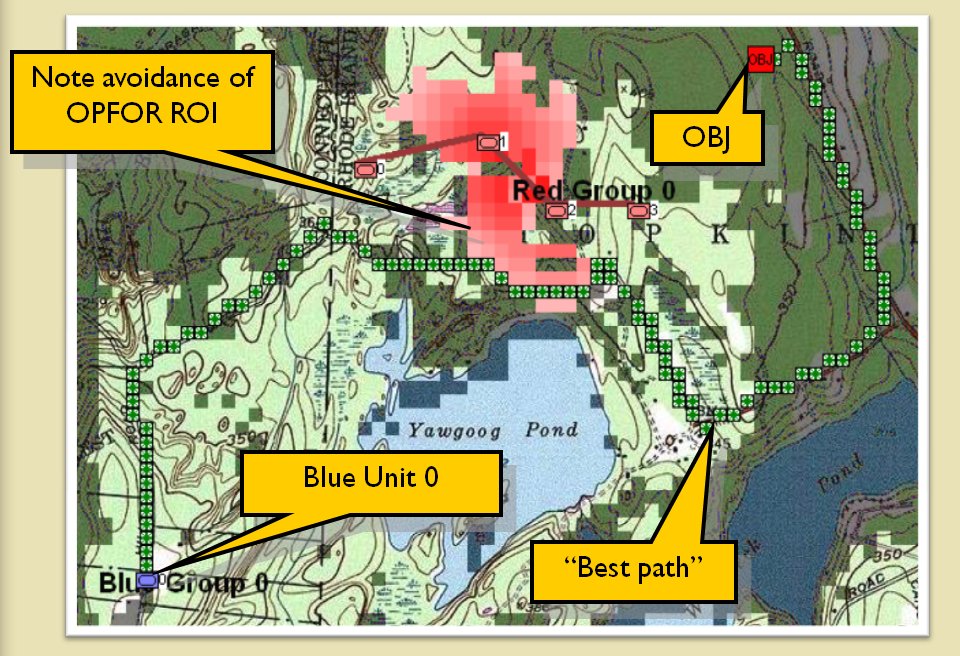

The screen shot (above) is a visual representation of what the AI is up to. You won’t get to see this in the actual game. The program that’s running is called the AI Editor which is a bit of a misnomer because you don’t actually edit the AI in it; you mostly just get to observe what it’s doing. There’s a lot of stuff going on in the above image. There are multiple layers visually displaying different types of data (check out the blog – Layers: Why a Military Simulation is Like a Parfait – for more information about these). But, what interests us are the AI layers: Battle Groups, Objectives, and that thin yellow line that snakes from a group of blue units, crossing Antietam Creek at the Middle Bridge and then, amazingly, exploiting a data anomaly to reach its goal: a point far behind enemy lines.

Some background on the situation:

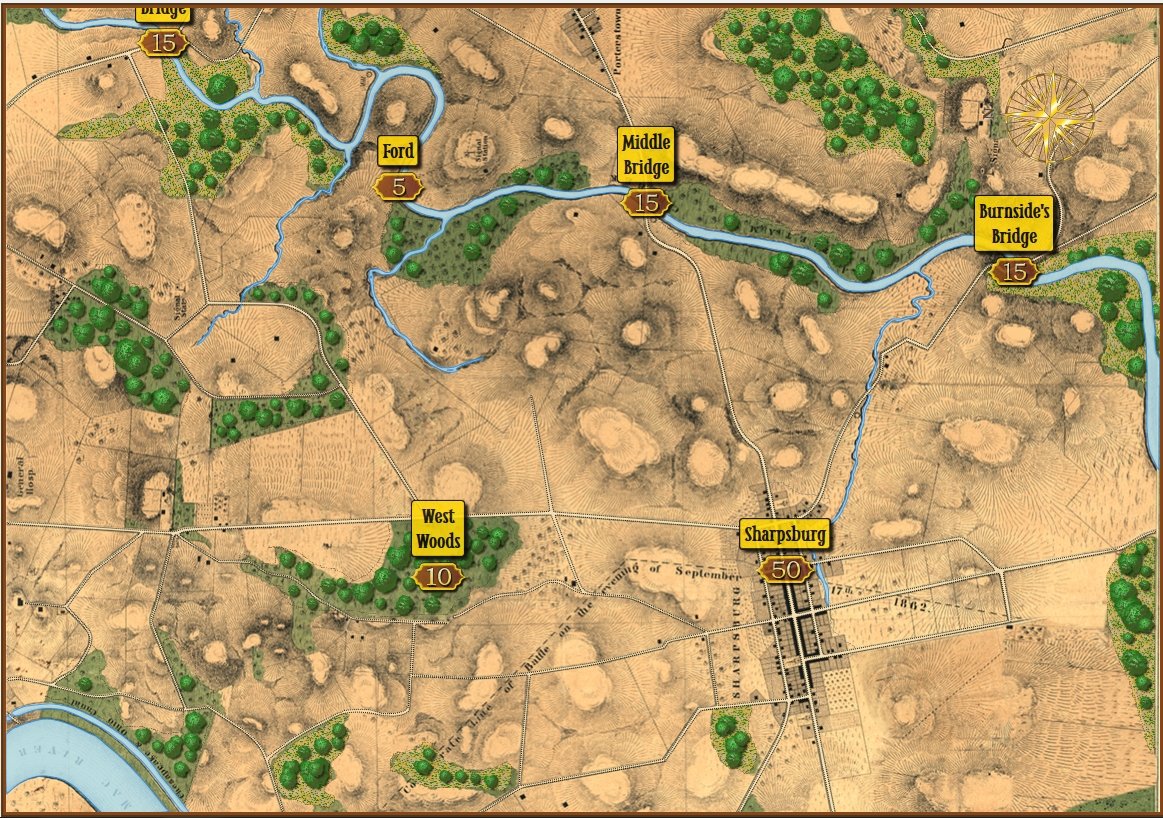

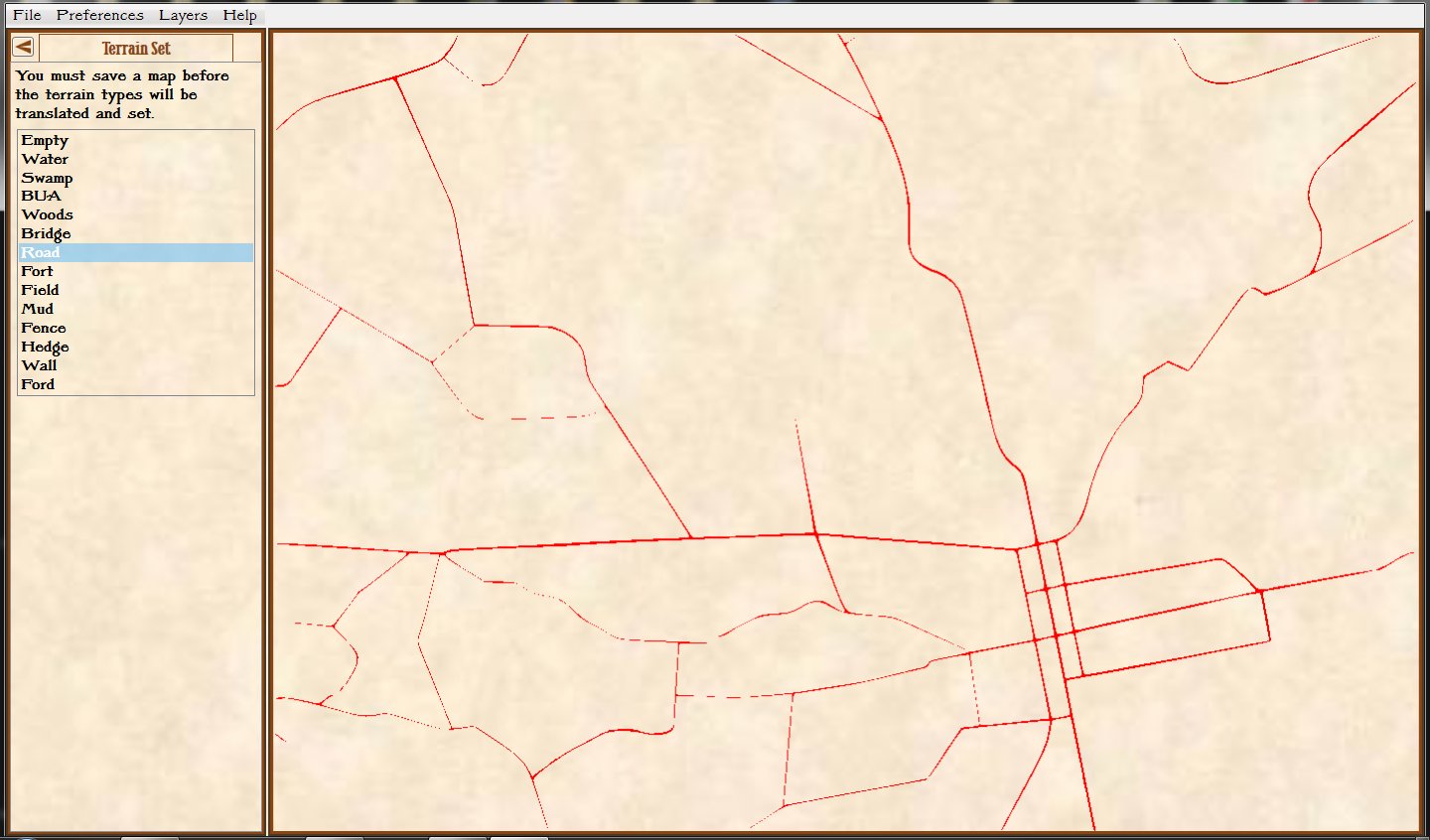

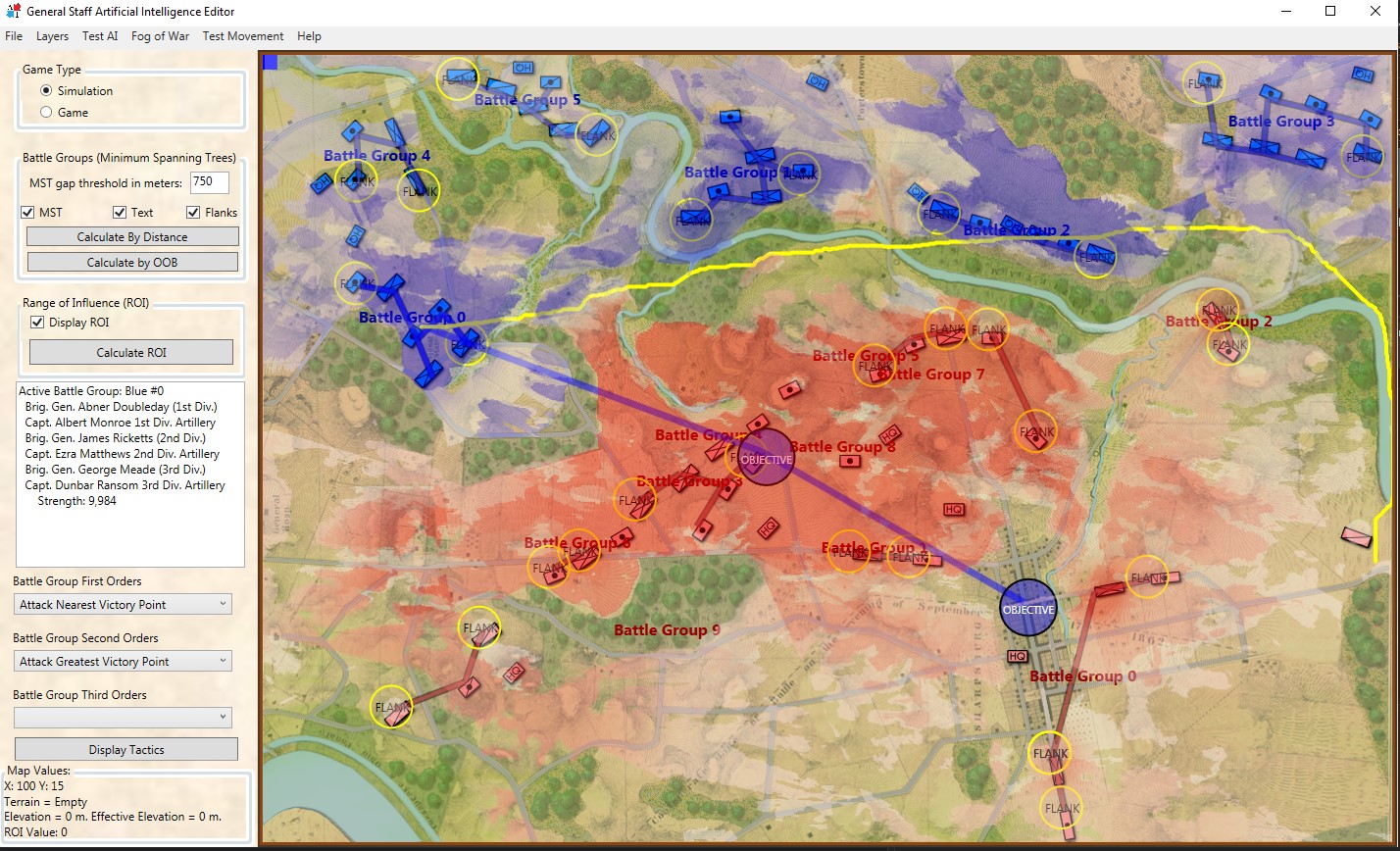

The map of the Antietam Battlefield (screen shot) with terrain and elevation layers displayed. Click to enlarge.

Underlying all the clutter from the first screen capture, top, is the battle of Antietam (above). The map has been rotated 90 degrees to the left so north is now pointing to the left; east is at the top of the screen.

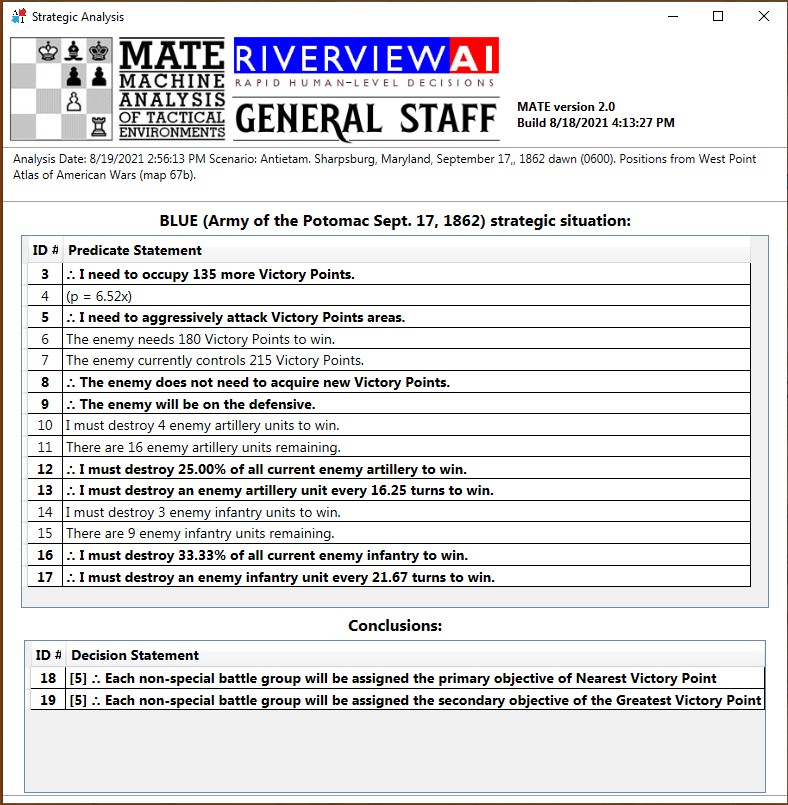

After adding Blue (Union) and Red (Confederate) units to the map in their historical positions at 0600 September 17, 1862 the AI performed a tactical analysis from the perspective of Blue.

The above are a list of Predicate Statements all of which the AI knows to be true. Statements preceded by the logical sign ∴ (therefore) are conclusions, or inferences, derived from the predicate statement referenced in the brackets. It is this analysis that determines if the AI will be on the offensive or defensive and what its objectives will be.

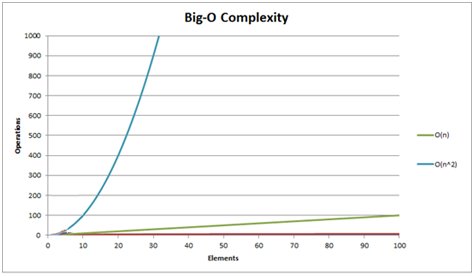

Next, the AI performs Range of Influence (ROI) calculations for the entire observable battlefield. I plan on doing a video about this later, but for now the darker the red (in the topmost screen capture) the more – and more powerful – weapons the Red army can bear on that point. The AI next divides all the units on the map into a forest of minimum spanning trees called Battle Groups. I want to do a video about this, too. However, if you can’t wait, these subjects are covered in my paper, Implementing the Five Canonical Offensive Maneuvers in a CGF Environment (free download).

Again, referring to the top screenshot you can see the AI’s calculations to this point:

- It has determined it (Blue) will be on the offensive.

- It has calculated enemy ROI.

- It has assigned objectives to the first Battle Group.

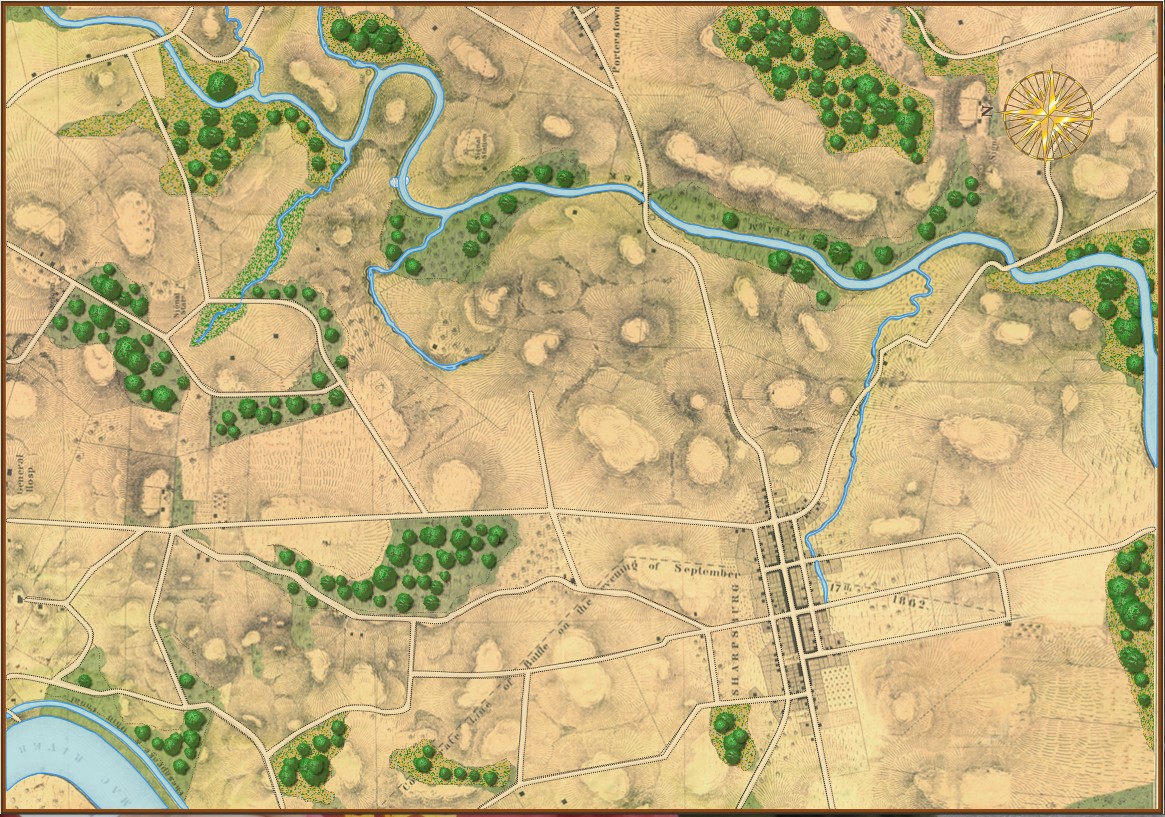

Flanking Algorithm published in, “Algorithms for Generating Attribute Values for the Classification of Tactical Situations”. Click to enlarge.

Now the AI needs to determine if the enemy has an ‘open or unanchored flank’. In Algorithms for Generating Attribute Values for the Classification of Tactical Situations I published the Algorithm for Flanking Attribute Value Function (right). It basically comes down to this: can the AI trace an unbroken path from the center of the Blue Battle Group to a specific point (called the Retreat Point) far behind enemy lines without crossing into ‘No Go Areas’ (water, swamp) or entering any area controlled by Red’s ROI (literally the red areas in the topmost screen shot).

The reason that I was using Antietam as a test case for anchored / unanchored flanks is because years ago I had analyzed the battle for my doctoral thesis and knew it to be a classic example of anchored flanks; Lee’s left flank rests on the Potomac and his right flank is anchored on the Antietam. Granted, the Confederate flanks were held by Stuart’s cavalry with a little horse artillery support but they were still, by definition, anchored flanks.

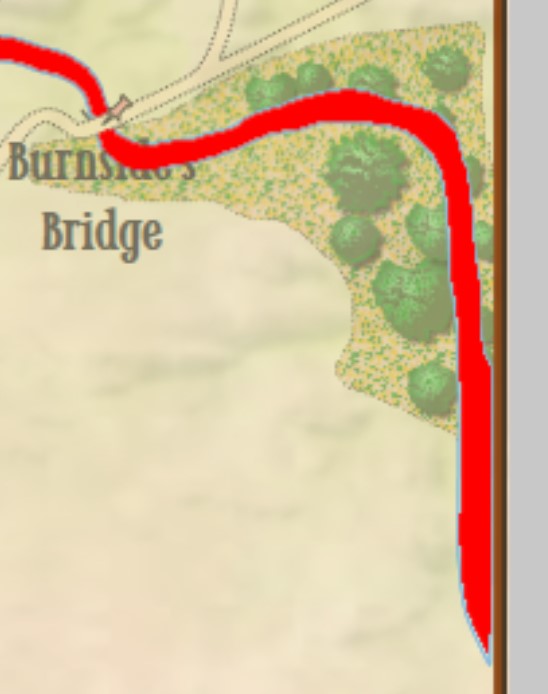

Due to an error in the data that made up the Antietam terrain map a 1 pixel (about 3.8 meter wide) strip of ‘no terrain’ was inserted at the far right hand edge of the map (see blow up of screen capture, right; it’s the thin line between the water, represented in red, and the brown edge of the map). This meant there was a ‘land bridge’ across Antietam Creek where none existed in real life. A digital parting of the Red Sea, if you will. But, by the rules of the game the AI perfectly performed its function. There was no error in the AI – again, the AI performed better than I had dared hope – the error was with the data set.

Due to an error in the data that made up the Antietam terrain map a 1 pixel (about 3.8 meter wide) strip of ‘no terrain’ was inserted at the far right hand edge of the map (see blow up of screen capture, right; it’s the thin line between the water, represented in red, and the brown edge of the map). This meant there was a ‘land bridge’ across Antietam Creek where none existed in real life. A digital parting of the Red Sea, if you will. But, by the rules of the game the AI perfectly performed its function. There was no error in the AI – again, the AI performed better than I had dared hope – the error was with the data set.

And that’s how fifty years from now I can see a cyber-detective standing over the chalk marks around a body saying, “Yeah, the machine performed perfectly, brilliantly, in fact. But, the error in the data set killed him.”

It’s already happened in real life. For cars with autopilot the data set of the world in which it operates is crucial. However, “against a bright spring sky, the car’s sensors system failed to distinguish a large white 18-wheel truck and trailer crossing the highway, Tesla said. The car attempted to drive full speed under the trailer, “with the bottom of the trailer impacting the windshield of the Model S”, Tesla said.” The driver died. The AI functioned perfectly. But, the error in the data set killed him.

So, I fixed the error in the data set (probably caused by not using the right values in InkScape when I converted the Antietam Water.bmp into paths) and imported it back into the Antietam map using the General Staff Map Editor, saved it out, and ran the AI Editor again and saw this:

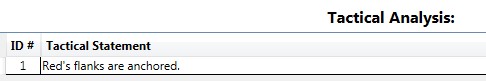

The AI did not display a yellow path from the center of the Blue Battle Group to the Red Retreat Point because none existed. Instead, it just wrote the first Predicate Statement in the Tactical Analysis stack: “Red’s flanks are anchored”.

The AI did not display a yellow path from the center of the Blue Battle Group to the Red Retreat Point because none existed. Instead, it just wrote the first Predicate Statement in the Tactical Analysis stack: “Red’s flanks are anchored”.

Again, the machine was performing perfectly. And its results were no longer surprising.

Addendum

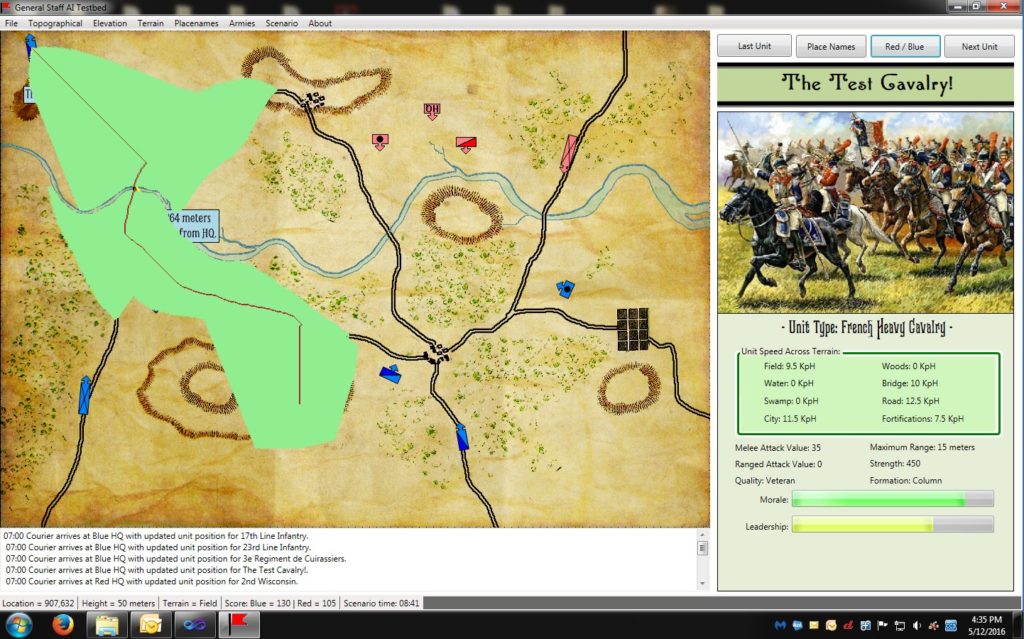

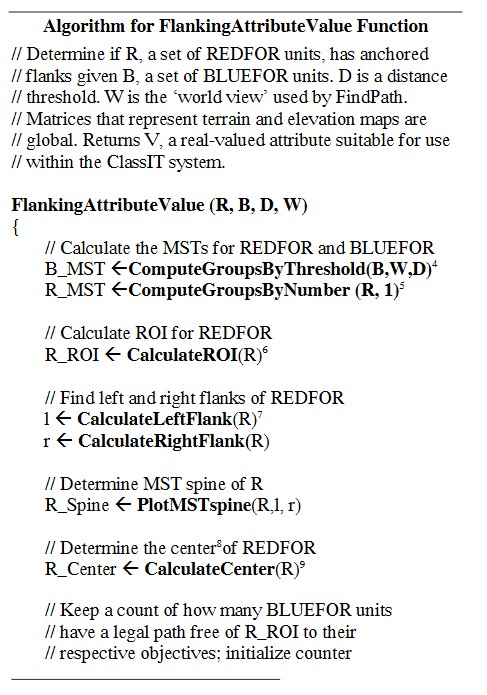

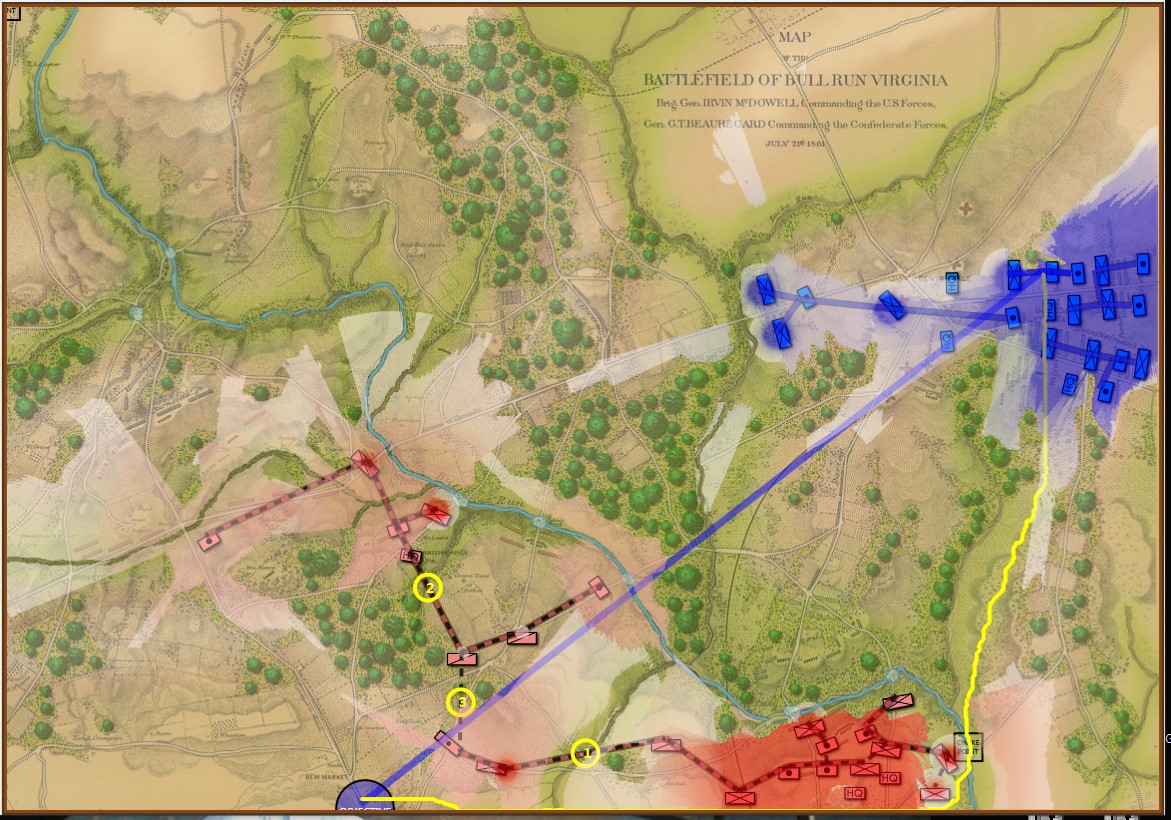

I recently got to experience this again (though this time it was caused by a different data bug) when I was reviewing the AI’s decisions at the battle of Manassas:

Because the Range of Influence was not calculating the very bottom row the AI found another, perfectly legal, way to reach its goal. Screen shot from the General Staff AI Editor. Click to enlarge.

In this instance, the error in the database was caused by the Range of Influence (basically a map of what red and blue can see and hit) not calculating the very last row. Consequently, the AI was able to legally trace a path from the blue forces in the northeast to their goal at the bottom of the map.

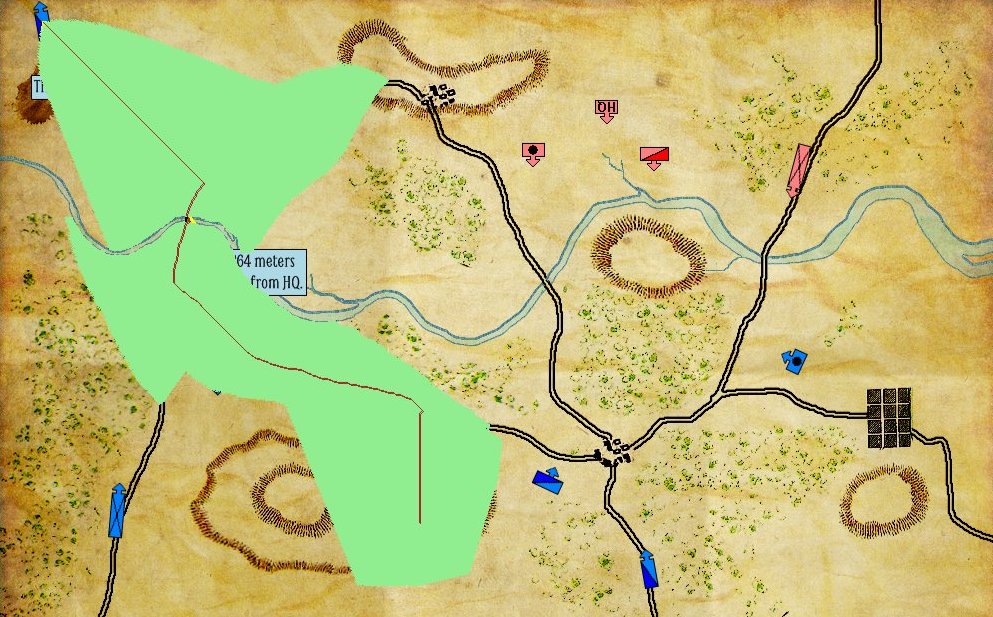

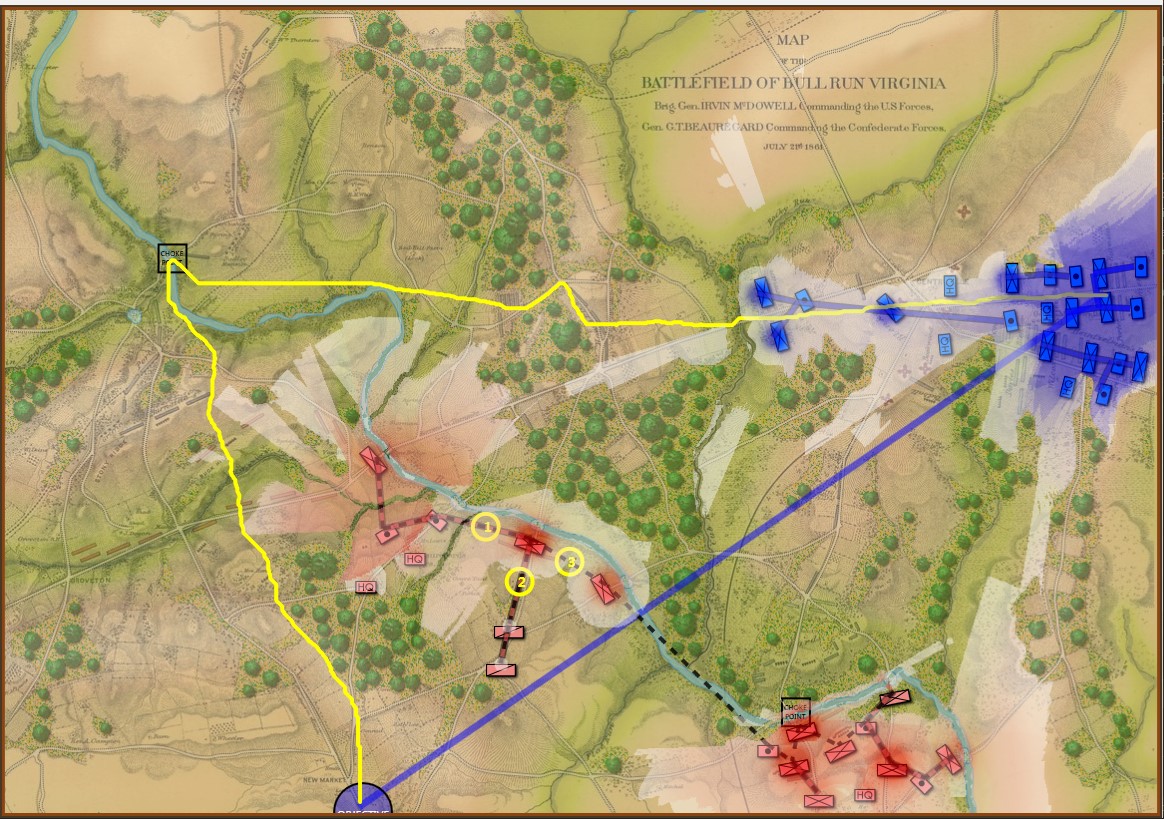

After this bug was corrected the AI performed as expected:

The AI correctly sees going around red’s left flank as the solution to the problem. Screen shot from General Staff AI Engine. Click to enlarge

In the above screen shot the AI has demonstrated the correct solution to the tactical problem facing blue at Manassas on July 20, 1861 (the day before the actual battle). Red’s left flank is unanchored. It’s wide open. Note how the AI identifies the one choke point (Sudley Springs Ford) in the plan.

So, the AI surprised me again. I think it’s looking pretty good. When you play against it, watch your flanks.