I have a lot of respect for Steve Wozniak, and quite a bit less for Elon Musk [1]. Both recently signed a letter calling for “all AI labs to immediately pause for at least 6 months the training of AI systems more powerful than GPT-4.” Woz is a true computer hardware pioneer, but he’s certainly not an AI expert; and Elon, well, I’m not sure where his expertise lies, but it’s not AI.

When it comes to creating AI capable of commanding troops on a battlefield, I am probably one of the world’s top experts on the subject (it’s not a crowded field). I’ve been writing about and studying ‘computational military reasoning’ for my entire professional career. It was the subject of my doctoral thesis, I’ve written AI for numerous computer wargames, and I’ve been a Principal Investigator for DARPA (Defense Advanced Research Projects Agency) on this very subject.

I am confident in stating that no humans have been injured or killed as a result of my work in computational military reasoning. However, the most recent NHTSA data reports that there have been at least “419 crashes [and]… 18 definite fatalities” involving autonomous self-driving vehicles (like Mr. Musk’s Teslas). So, clearly, in some circumstances AI can be dangerous. In all fairness, I should state that the reason self-driving vehicles keep having fatal crashes isn’t technically the AI; it’s that the AI has imperfect information about the world in which it operates. The AI for self-driving vehicles gets that information from cameras and radar (LIDAR would be good, too). However, Tesla just removed the radar from its vehicles (“Elon Musk Overruled Tesla Engineers Who Said Removing Radar Would Be Problematic: Report”), leaving the AI even more in the dark about the world in which it operates. So, is the AI at fault, or corporate management? Maybe the problem isn’t AI.

Furthermore, most of what’s being sold to the public as AI is just string-manipulation parlor tricks tacked on to an internet search. ChatGPT-4, which is making all the headlines these days, was recently described accurately:

“Put simply, ChatGPT takes an initial prompt and determines – on an individual, word by word basis – what most often comes next based on the existing texts that it has scanned throughout the internet. In Wolfram’s words, ‘it’s just adding one word at a time’ – but doing it so quickly that it seems as though a robot is writing an original, whole block of text.

Essentially, ChatGPT is a gigantic version of Google autocomplete.” – AI or BS? How to tell if a marketing tool really uses artificial intelligence
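To make the “autocomplete” description concrete, here is a minimal Python sketch of the idea of “adding one word at a time”: count which word most often follows each word in some text, then extend a prompt with the most frequent follower. This is a toy illustration only, not how ChatGPT is actually built. The corpus is made up, and the function names `build_bigram_table` and `autocomplete` are hypothetical.

```python
from collections import Counter, defaultdict


def build_bigram_table(text):
    """Count, for each word, which words follow it in the text."""
    words = text.lower().split()
    table = defaultdict(Counter)
    for current, following in zip(words, words[1:]):
        table[current][following] += 1
    return table


def autocomplete(table, prompt, max_words=5):
    """Extend the prompt one word at a time with the most frequent follower."""
    words = prompt.lower().split()
    if not words:
        return ""
    for _ in range(max_words):
        followers = table.get(words[-1])
        if not followers:
            break  # the last word was never followed by anything in the corpus
        words.append(followers.most_common(1)[0][0])
    return " ".join(words)


# Made-up toy corpus (an assumption for illustration, not real training data).
corpus = "war is hell . war is long . war is hell ."
table = build_bigram_table(corpus)
print(autocomplete(table, "war", max_words=2))  # prints "war is hell"
```

Notice that nothing in this sketch knows who said the words it strings together; it only knows which word tends to come next.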

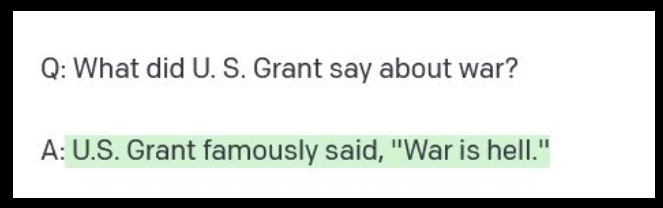

I recently asked ChatGPT for a quote from U. S. Grant about war and it responded:

Actually, it was W. T. Sherman who said, “War is hell.” But ChatGPT has no real intelligence. I have no idea how it erroneously linked Grant to the quote. The greatest fear we should have of ChatGPT is incorrect citations in reference papers. The creators of ChatGPT have clearly traded accuracy for glitz and hype; it’s not even a good internet search engine, but it sure seems impressive!

There’s one more thing you should know. There are two kinds of machine learning: supervised and unsupervised. Probably >95% of machine learning programs are ‘supervised’, which means they are ‘trained’ on a data set. When you see the word ‘training’ in reference to machine learning, it is almost always supervised. Here’s an example of supervised machine learning: Netflix movie recommendations. Every time you select a movie on Netflix you are training their system on your likes and dislikes. It does a great job, doesn’t it? No, it does a terrible job. It once recommended The Sound of Music to me because I watched Das Boot. Makes perfect sense. They both take place during WWII.

What I’m saying is that there is no ‘there’ there. There is no intelligence there. Somebody at Netflix (at one time I read they employed out-of-work screenwriters) tagged both Das Boot and The Sound of Music with the same descriptor, presumably ‘WWII’ or ‘war movie’, and that was all that was necessary for Netflix to make a terrible suggestion.
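Here is a minimal Python sketch of how a single shared tag could be enough to produce that kind of suggestion. It is purely hypothetical: the catalog, the tags, and the `recommend` function are all invented for illustration, and I am not claiming this is how Netflix’s system actually works.

```python
# Hypothetical catalog: every title and tag here is invented for illustration.
CATALOG = {
    "Das Boot": {"WWII", "submarine", "German-language"},
    "The Sound of Music": {"WWII", "musical", "family"},
    "Run Silent, Run Deep": {"WWII", "submarine"},
    "Mary Poppins": {"musical", "family"},
}


def recommend(watched, catalog):
    """Return (title, shared_tags) for every other title sharing a tag with `watched`.

    Results are ordered by number of shared tags (most first), then by title.
    """
    watched_tags = catalog[watched]
    scored = []
    for title, tags in catalog.items():
        if title == watched:
            continue
        shared = watched_tags & tags  # set intersection: tags in common
        if shared:
            scored.append((title, sorted(shared)))
    scored.sort(key=lambda item: (-len(item[1]), item[0]))
    return scored


for title, shared in recommend("Das Boot", CATALOG):
    print(title, shared)
```

With this toy catalog, The Sound of Music shows up as a suggestion for Das Boot on the strength of the ‘WWII’ tag alone. The matching is simple set overlap; nothing in it understands what either film is about.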

I work in unsupervised machine learning. It doesn’t search the internet or look for similar words in a big database. It tries to make sense of the world in which it operates (a historic battlefield) and attempts to make optimal decisions for moving units based on math, geometry, trigonometry and Boolean logic.

That’s AI. And it’s not dangerous. Autonomous self-driving cars? They’re dangerous.

References

[1] Though I have to admit losing $20 billion in a few months is impressive.